Seamless Updates with Canary Deployment on AWS EKS: Leveraging Istio, Argo CD, and Argo Workflows

In the dynamic world of cloud-native applications, deploying new features or updates to production without disrupting users is a challenging task. Canary deployment allows organizations to roll out changes gradually, reducing risk and gathering feedback from a subset of users before a full release. In this guide, we will explore how to implement canary deployment on AWS Elastic Kubernetes Service (EKS) using Istio, Argo CD, and Argo Workflows. By combining these technologies, development teams can innovate rapidly while maintaining the reliability and stability of their cloud-native applications.

In this guide, we will delve into the step-by-step process of setting up an AWS EKS cluster, configuring Istio for advanced service mesh capabilities, and simplifying application deployment with Argo CD. Furthermore, we will showcase how Argo Workflows can orchestrate the canary deployment process, providing a seamless and controlled transition to the new version. Let's dive into the world of canary deployment on AWS EKS, where reliability meets innovation, and continuous improvement becomes a reality.

Setting the Stage with AWS EKS

AWS Elastic Kubernetes Service (EKS) is a managed Kubernetes service that simplifies the deployment, management, and scaling of containerized applications using Kubernetes. It provides a reliable and scalable platform for running microservices and applications.

    # Create an EKS cluster using the AWS Management Console or AWS CLI
    aws eks create-cluster --name my-eks-cluster --role-arn arn:aws:iam::123456789012:role/eks-cluster-role --resources-vpc-config subnetIds=subnet-1a,subnet-1b,subnet-1c,securityGroupIds=sg-1234567890

    # Configure the kubeconfig file to access the EKS cluster
    aws eks update-kubeconfig --name my-eks-cluster

    # Verify the cluster status
    kubectl get nodes

Example: Setting up an AWS EKS Cluster

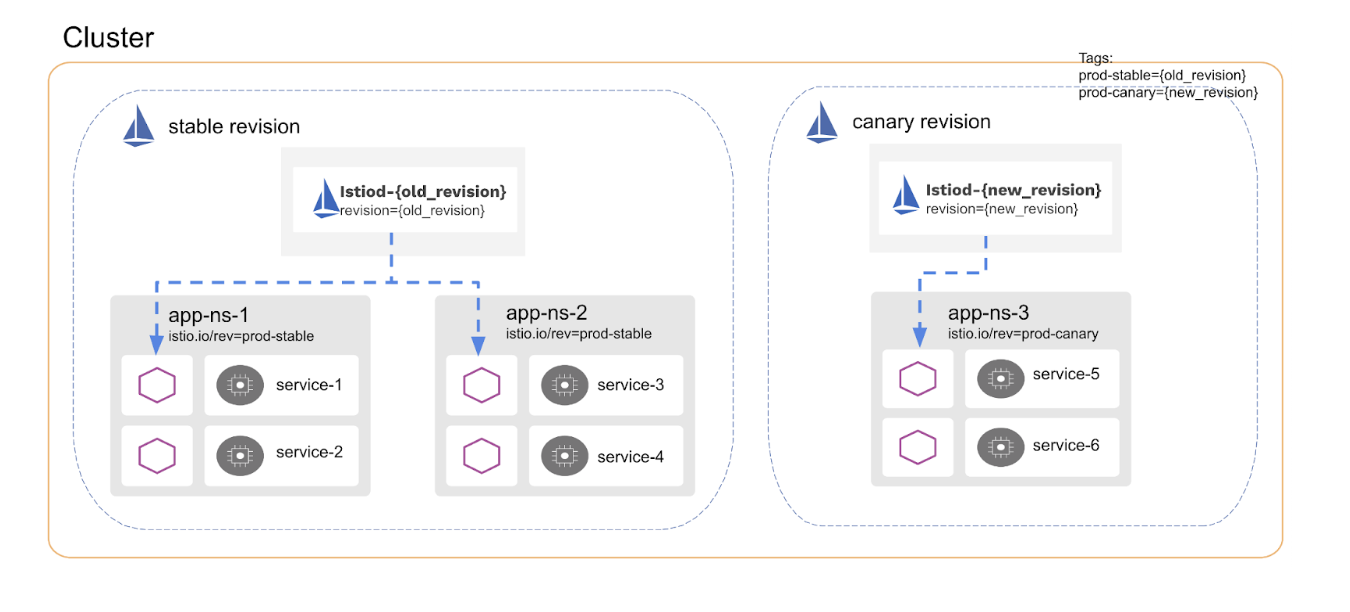
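Note that `aws eks create-cluster` creates only the control plane, so `kubectl get nodes` lists nothing until worker nodes are added. As an alternative sketch, the cluster and a managed node group can be declared together in an eksctl configuration file. eksctl is not part of the original walkthrough, and the region, node group name, instance type, and sizes below are assumptions chosen for illustration.

```yaml
# cluster.yaml: hypothetical eksctl configuration (eksctl is an alternative to the AWS CLI steps)
apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig
metadata:
  name: my-eks-cluster
  region: us-east-1          # assumed region
managedNodeGroups:
  - name: default-workers    # hypothetical node group name
    instanceType: t3.medium  # assumed instance type
    desiredCapacity: 2
    minSize: 2
    maxSize: 4
```

With this file in place, `eksctl create cluster -f cluster.yaml` would create the cluster and its worker nodes in one step.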

Introducing Istio for Service Mesh Capabilities

Istio is an open-source service mesh platform that offers advanced networking and security features for Kubernetes applications. It provides powerful traffic management, observability, and fault tolerance capabilities.

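    # Install Istio using Helm (base chart first, then the istiod control plane)
    helm repo add istio https://istio-release.storage.googleapis.com/charts
    helm repo update
    helm install istio-base istio/base -n istio-system --create-namespace
    helm install istiod istio/istiod -n istio-system --wait

    # Enable Istio automatic sidecar injection
    kubectl label namespace default istio-injection=enabled

    # Create Istio VirtualService and DestinationRule for canary deployment
    kubectl apply -f - <<EOF
    # VirtualService and DestinationRule manifests go here
    EOF

Example: Installing Istio and Enabling Traffic Management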
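The VirtualService and DestinationRule referred to above are not spelled out, so here is a minimal sketch of what they might look like for a 95/5 split. The service name `my-app` and the `version: v1` / `version: v2` pod labels are assumptions, not values from the original setup.

```yaml
# Hypothetical manifests: "my-app" and the version labels are illustrative assumptions.
apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  name: my-app
  namespace: default
spec:
  host: my-app              # Kubernetes Service that fronts both versions
  subsets:
    - name: stable
      labels:
        version: v1         # pods of the current release
    - name: canary
      labels:
        version: v2         # pods of the new release
---
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: my-app
  namespace: default
spec:
  hosts:
    - my-app
  http:
    - route:
        - destination:
            host: my-app
            subset: stable
          weight: 95        # most traffic stays on the stable version
        - destination:
            host: my-app
            subset: canary
          weight: 5         # a small share goes to the canary
```

The weights in a route must add up to 100. Promoting the canary then amounts to raising its weight in steps until it reaches 100.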

Simplifying Application Deployment with Argo CD

Argo CD is a declarative, GitOps continuous delivery tool for Kubernetes. It helps automate application deployments, reduces human errors, and provides a consistent way to manage applications' configuration.

Installing Argo CD and Argo Workflows

To use Argo CD and Argo Workflows for your canary deployment, you need to install both tools in your Kubernetes cluster.

    # Create a namespace for Argo CD
    kubectl create namespace argocd

    # Install Argo CD using Helm
    helm repo add argo https://argoproj.github.io/argo-helm
    helm repo update
    helm install argocd argo/argo-cd -n argocd

    # Expose the Argo CD UI
    kubectl patch svc argocd-server -n argocd -p '{"spec": {"type": "LoadBalancer"}}'

Example: Installing Argo CD

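    # argo-cd-application.yaml
    apiVersion: argoproj.io/v1alpha1
    kind: Application
    metadata:
      name: my-application
      namespace: argocd        # Argo CD looks for Application resources in its own namespace by default
    spec:
      destination:
        namespace: default     # namespace the application is deployed into
        server:                # URL of the target cluster's API server
      project: default
      source:
        repoURL:               # URL of the Git repository that holds the manifests
        path: kubernetes       # directory inside the repository
        targetRevision: main   # branch, tag, or commit to track
      syncPolicy:
        automated: {}          # sync automatically when the repository changes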

Example: Deploying Applications with Argo CD
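In the Application above, `automated: {}` turns on automatic sync with its default settings, which means Argo CD neither deletes resources removed from Git nor reverts manual changes made in the cluster. If stricter GitOps behaviour is wanted, the sync policy could be extended along these lines. This is a sketch of one possible configuration, not the setup used in the original example.

```yaml
# Fragment of an Application spec; only the syncPolicy block is shown.
syncPolicy:
  automated:
    prune: true       # delete cluster resources that no longer exist in Git
    selfHeal: true    # revert manual changes that drift from Git
  syncOptions:
    - CreateNamespace=true   # create the destination namespace if it is missing
```

Pruning is worth enabling deliberately, since it lets a Git change remove live resources.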

Orchestrating the Canary Deployment with Argo Workflows

Argo Workflows is an excellent tool for orchestrating complex workflows in Kubernetes. With Argo Workflows, you can define the steps and logic required to execute the canary deployment process seamlessly.

    # Install Argo Workflows using Helm
    helm install argoworkflows argo/argo-workflows

Example: Installing Argo Workflows

Canary Deployment Workflow

This section gives an overview of the canary deployment workflow using Istio, Argo CD, and Argo Workflows, from pushing updates to the canary environment to monitoring and gathering feedback during the canary deployment.

    # canary-deployment-workflow.yaml
    apiVersion: argoproj.io/v1alpha1
    kind: Workflow
    metadata:
      name: canary-deployment
    spec:
      entrypoint: canary-deploy
      # The exit handler runs when the workflow finishes, whether it succeeded or failed
      onExit: exit-handler
      templates:
        # Main template: runs the three stages one after another
        - name: canary-deploy
          steps:
            - - name: image-promotion
                template: image-promotion
            - - name: traffic-shifting
                template: traffic-shifting
            - - name: validation-checks
                template: validation-checks
        - name: image-promotion
          container:
            image: myorg/image-promotion:latest
            command: ["sh", "-c", "echo 'Image promotion step'"]
        - name: traffic-shifting
          container:
            image: myorg/traffic-shifting:latest
            command: ["sh", "-c", "echo 'Traffic shifting step'"]
        - name: validation-checks
          container:
            image: myorg/validation-checks:latest
            command: ["sh", "-c", "echo 'Validation checks step'"]
        - name: exit-handler
          container:
            image: myorg/exit-handler:latest
            command: ["sh", "-c", "echo 'Canary deployment completed'"]

Example: Creating a Canary Deployment Workflow
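The `traffic-shifting` template in the workflow only prints a message. As a rough sketch of how that step could actually change routing, an Argo Workflows `resource` template can patch the Istio VirtualService directly. The VirtualService name `my-app`, its namespace, and the `stable` / `canary` subsets are assumptions, and the workflow's service account would need permission to patch VirtualService resources.

```yaml
# Hypothetical replacement for the "traffic-shifting" entry in spec.templates.
- name: traffic-shifting
  resource:
    action: patch
    mergeStrategy: merge    # JSON merge patch; strategic merge does not apply to custom resources
    manifest: |
      apiVersion: networking.istio.io/v1beta1
      kind: VirtualService
      metadata:
        name: my-app          # assumed VirtualService name
        namespace: default
      spec:
        http:
          - route:
              - destination:
                  host: my-app
                  subset: stable
                weight: 95
              - destination:
                  host: my-app
                  subset: canary
                weight: 5
```

Because a JSON merge patch replaces lists wholesale, the full `http` route list is written out rather than only the weights that change.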

To illustrate the canary deployment workflow, let's consider an example. A software company deploys a new version of its e-commerce application, introducing a more efficient product recommendation algorithm. The canary deployment process begins by pushing the new version to the canary environment. Argo CD detects the change and syncs it to the cluster, which in turn starts the canary deployment workflow orchestrated by Argo Workflows (for example, through an Argo CD sync hook that creates the Workflow).

During the canary deployment, Istio directs only 5% of the user traffic to the canary version, while the remaining 95% is directed to the stable version. Observing the behavior of the canary version under real-world traffic conditions helps identify potential issues and gauge its performance.

To ensure the canary version's stability, Argo Workflows runs a series of automated tests against the canary deployment, including API endpoint tests and performance tests. These tests validate the application's functionality and performance against predefined criteria.

Additionally, the software company monitors application metrics and gathers user feedback to assess the canary version's performance. Prometheus and Grafana are used to monitor key performance indicators (KPIs) such as response times and error rates.
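One way to turn the error-rate KPI into an automated signal is a Prometheus alerting rule over Istio's standard request metric. The sketch below assumes the Prometheus Operator is installed (it provides the `PrometheusRule` resource), that Prometheus scrapes Istio's metrics, and that the canary runs as `version: v2` of a service called `my-app`. The 1% threshold is an arbitrary illustrative value.

```yaml
# Hypothetical alert: service name, version label, and threshold are assumptions.
apiVersion: monitoring.coreos.com/v1
kind: PrometheusRule
metadata:
  name: canary-error-rate
  namespace: default
spec:
  groups:
    - name: canary.rules
      rules:
        - alert: CanaryHighErrorRate
          # Share of canary requests that returned a 5xx response over the last 5 minutes
          expr: |
            sum(rate(istio_requests_total{reporter="destination", destination_service_name="my-app", destination_version="v2", response_code=~"5.."}[5m]))
              /
            sum(rate(istio_requests_total{reporter="destination", destination_service_name="my-app", destination_version="v2"}[5m]))
              > 0.01
          for: 5m               # condition must hold for 5 minutes before the alert fires
          labels:
            severity: warning
          annotations:
            summary: Canary error rate is above 1%
```

The same expression can back a Grafana panel, so that the dashboard and the alert agree on how the error rate is measured.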

Benefits of Canary Deployment

Canary deployment offers several significant benefits for the scenario of deploying cloud-native applications on AWS EKS with Istio, Argo CD, and Argo Workflows:

Risk Mitigation

Canary deployment minimizes the risk associated with introducing new features or updates to the production environment. By initially releasing the changes to a small subset of users (the canary group), any potential issues or bugs can be identified early without impacting the majority of users.

Improved Reliability

Canary deployments help ensure the reliability of applications. By testing the new version under real-world conditions with a limited user base, developers can gather valuable feedback and address any performance or stability issues before a full release.

Smooth Rollback

If the canary version shows unexpected behavior or performance degradation, a rollback is straightforward. Only a small percentage of users are affected, and the impact of the rollback is minimal.
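With Istio handling the traffic split, a rollback can be as small as a routing change. The following sketch sends all traffic back to the stable version, assuming a VirtualService named `my-app` and a DestinationRule that defines a `stable` subset. Both names are illustrative.

```yaml
# Hypothetical rollback: route every request to the stable subset.
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: my-app
  namespace: default
spec:
  hosts:
    - my-app
  http:
    - route:
        - destination:
            host: my-app
            subset: stable
          weight: 100       # the canary no longer receives traffic
```

If this manifest lives in the Git repository that Argo CD tracks, reverting the commit that introduced the canary weights has the same effect and keeps Git as the source of truth.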

Early Feedback Loop

Canary deployments provide an early feedback loop from real users. This allows development teams to understand how users interact with the new features, gather feedback, and make necessary improvements before scaling the changes to the entire user base.

Optimized Resource Utilization

Canary deployments can make efficient use of resources. Because only a fraction of the traffic reaches the canary version, it needs only a small share of compute capacity, which limits the load a faulty release can place on the system.

Seamless User Experience

Users in the canary group experience a seamless transition to the new version. With Istio's traffic management capabilities, the transition can be smooth and controlled, ensuring minimal disruption to users.

Fast Iteration and Continuous Improvement

Canary deployments enable development teams to iterate quickly and continuously improve their applications. This rapid iteration leads to faster innovation and the ability to respond promptly to market demands.

Easy Monitoring and Observability

Canary deployments pair naturally with monitoring and observability tooling. Tools like Prometheus and Grafana provide real-time visibility into the canary version's performance, making it easier to detect anomalies or issues.

Validation of Hypotheses

Canary deployments can be used to validate hypotheses about user behavior or performance improvements. For example, developers can test whether a specific algorithm change leads to better user engagement.

Increased Developer Confidence

With the safety net of canary deployments, developers gain confidence in deploying changes to production. It encourages a culture of continuous integration and continuous deployment (CI/CD).

Conclusion

Canary deployment on AWS EKS with Istio, Argo CD, and Argo Workflows offers a powerful strategy for seamless application updates. By gradually rolling out changes to a subset of users, organizations can mitigate risks and gather early feedback, ensuring improved reliability and a smooth user experience.

The combination of Istio's traffic management, Argo CD's GitOps approach, and Argo Workflows' orchestration capabilities streamlines the canary deployment process. This combination empowers development teams to iterate quickly, respond to user feedback, and maintain a competitive edge.

Canary deployment fosters a culture of continuous improvement, encouraging data-driven decisions based on real user interactions. It optimizes resource utilization and instills confidence in deploying changes to production.

With its many benefits, canary deployment remains a key strategy for delivering high-quality cloud-native applications and staying ahead in the ever-evolving technology landscape.

Ahmet Aydın

Senior DevOps Consultant @kloia